Sloth-bot

Sloth-bots are large autonomous robots that move imperceptibly slowly. Over time, as a result of their interactions with people, they gradually reconfigure the physical architecture. As the use of the space changes throughout the day, sloth-bots reposition themselves in anticipation of new interactions with the building’s occupants.

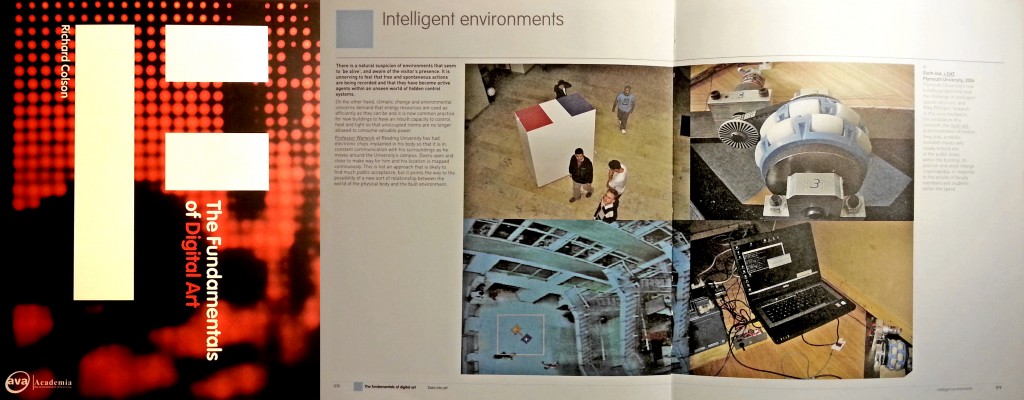

The Arch-OS Vision Tool provides dynamic data on crowd motion in public spaces. The information is acquired by four CCD cameras overlooking the space in Portland Square and is made available to any computer in the Arch-OS system. Motion information is stored every 40 ms as a data matrix using a double-buffer scheme. The Vision Tool’s web server gives clients access to the most up-to-date data in the form of a binary stream produced by a server-side CGI program. Sloth-bot v1 has no vision system of its own. Its view of the world is restricted to the view the Arch-OS system has of it; its only visual understanding of itself comes from an external view of its activities. Sloth-bot v1 maintains a dynamic dialogue with this server, continually updating its knowledge of its own location and the locations of others around it.
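Beyond the 40 ms interval and the phrase "double buffer", the Vision Tool's internals are not described here. As a minimal sketch, assuming a single producer thread and hypothetical names (`DoubleBufferedMatrix`, `write`, `read`, `frame`), a double-buffer scheme lets the capture side fill one matrix while clients read the other, so a reader never sees a half-written frame:

```python
import threading
from typing import List

Matrix = List[List[float]]


class DoubleBufferedMatrix:
    """Hypothetical sketch: one writer fills the back buffer, then swaps it to the front."""

    def __init__(self, rows: int, cols: int) -> None:
        # Two equally sized buffers; the one at index self._front is what readers see.
        self._buffers = [[[0.0] * cols for _ in range(rows)] for _ in range(2)]
        self._front = 0
        self._lock = threading.Lock()
        self.frame = 0  # number of completed writes

    def write(self, matrix: Matrix) -> None:
        """Called by the single producer, e.g. once per 40 ms capture (assumed)."""
        # Only the writer changes self._front, so reading it here without the lock is safe.
        back = self._buffers[1 - self._front]
        for dst, src in zip(back, matrix):
            dst[:] = src  # fill the back buffer while readers use the front one
        with self._lock:
            self._front = 1 - self._front  # publish the new frame by swapping indices
            self.frame += 1

    def read(self) -> Matrix:
        """Return a copy of the latest complete frame."""
        with self._lock:
            return [row[:] for row in self._buffers[self._front]]
```

Swapping an index rather than copying the whole matrix keeps the writer's critical section short; the copy in `read` lets a client hold on to one frame while newer ones keep arriving.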

Sloth-bot has preprogrammed trajectories but also learns about the activities of the building’s inhabitants. It recognises a level of temporality that most users of the space are unaware of. Operating at a speed somewhere between the ‘frozen time’ of the building’s structure and the minute-by-minute ‘real time’ of its inhabitants, Sloth-bot v1 anticipates human behaviour and people’s responses to its interactions in the space. It learns that on the hour, nearly every hour, people pour out of the lecture theatre. It knows that the flow of people through the space increases around these times, and that beyond roughly five minutes either side of the hour the flow slows to a trickle. It knows that at around 5pm the trickle dies away and that soon, depending on the time of year, it will not be able to see itself until the morning.
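How Sloth-bot v1 actually learns these rhythms is not stated. One simple, hypothetical approach is to average the observed flow for each minute of the day across many days, so that recurring peaks such as the hourly exit from the lecture theatre stand out. The class and method names and the one-minute bins below are assumptions for illustration only:

```python
from datetime import datetime
from typing import List

MINUTES_PER_DAY = 24 * 60


class DailyFlowModel:
    """Hypothetical sketch: running mean of observed flow per minute of the day."""

    def __init__(self) -> None:
        self.total = [0.0] * MINUTES_PER_DAY  # summed flow per minute bin
        self.count = [0] * MINUTES_PER_DAY    # number of observations per bin

    @staticmethod
    def _bin(t: datetime) -> int:
        # Key by time of day only, so observations from different days share a bin.
        return t.hour * 60 + t.minute

    def observe(self, t: datetime, flow: float) -> None:
        i = self._bin(t)
        self.total[i] += flow
        self.count[i] += 1

    def expected(self, t: datetime) -> float:
        """Mean flow previously seen at this time of day (0.0 if never observed)."""
        i = self._bin(t)
        return self.total[i] / self.count[i] if self.count[i] else 0.0

    def busy_minutes(self, threshold: float) -> List[int]:
        """Minute-of-day bins whose mean flow is at least `threshold`."""
        return [
            i for i in range(MINUTES_PER_DAY)
            if self.count[i] and self.total[i] / self.count[i] >= threshold
        ]
```

Because bins are keyed by time of day rather than date, a model that also tracked the time of year (which governs when the space goes dark) would need an additional key.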

Sloth-bot v1 reflects a dynamic, real-time model of the processes within a building. It enables the building’s occupants to reflect on the complexity of their own interactions as they move through time and space. In response, occupants are able to better understand the complex relationships that exist between one another and their environment. In doing so, Sloth-bot v1 can enter into a direct, if slightly out-of-sync, dialogue with its inhabitants: it senses their presence and makes its awareness known, if only slowly.

Some mechanics:

A rather nice feature appears in Richard Colson’s The Fundamentals of Art.

Sloth-bots build on robotic technology developed by Dr Guido Bugmann that was famously incorporated into Donald Rodney’s Psalms. This work was exhibited at the South London Gallery as part of Rodney’s last exhibition, Nine Nights in Eldorado, in October 1997. In Psalms an autonomous wheelchair uses eight sonar sensors, shaft encoders, a video camera and a rate gyroscope to determine its position. A neural network of normalized radial basis function (RBF) nodes encodes the 25 semi-circular sequences of positions that form its trajectory (see http://www.tech.plym.ac.uk/soc/research/neural/research/wheelc.htm).
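The general idea of a normalized RBF network can be sketched as follows. This is not Bugmann's implementation: it assumes, for illustration, that each node is centred on a stored 2-D position along the trajectory and carries the next position as its target, with Gaussian activations of a hypothetical `width`:

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def normalized_rbf(p: Point, centres: List[Point], targets: List[Point], width: float) -> Point:
    """Normalized Gaussian RBF: output = sum_i a_i * target_i / sum_i a_i."""
    # Gaussian activation of each node, based on squared distance from its centre.
    acts = [
        math.exp(-((p[0] - cx) ** 2 + (p[1] - cy) ** 2) / (2 * width ** 2))
        for cx, cy in centres
    ]
    total = sum(acts)
    if total == 0.0:
        # Every activation underflowed: the input is far from all stored positions.
        raise ValueError("input is too far from every RBF centre")
    # Dividing by the total makes the weights sum to 1 (a weighted average of targets).
    x = sum(a * tx for a, (tx, _) in zip(acts, targets)) / total
    y = sum(a * ty for a, (_, ty) in zip(acts, targets)) / total
    return (x, y)
```

Dividing by the summed activations makes the output a weighted average of the stored targets, so between nodes the network interpolates smoothly instead of decaying towards zero, as an unnormalized Gaussian RBF network does far from its centres.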

The control system comprises a 586 laptop PC running a control program written in CORTEX-PRO, linked to a Rug Warrior board built around the Motorola 68HC11 microcontroller.