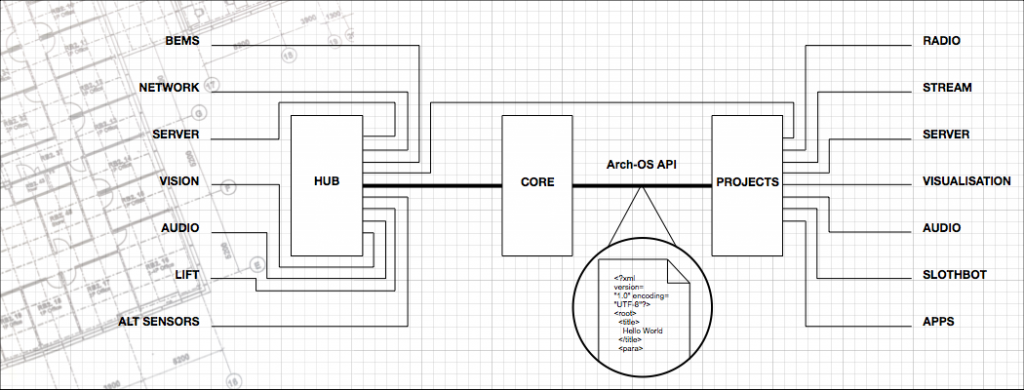

System

Arch-OS is an ‘Operating System’ for contemporary architectures, which has been developed to manifest the dynamic life of a building.

Arch-OS systems (integrated hardware and software) incorporate a range of embedded technologies to capture audio-visual and raw digital data from a building through a variety of sources which include:

- the Building Energy Management System (BEMS);

- computer and communications networks;

- the flow of people and social interactions;

- ambient noise levels;

- environmental conditions.

This vibrant data is then manipulated and replayed through audio-visual projection systems incorporated within the fabric of the building, and broadcast externally using streaming Internet and FM radio technologies. By making the invisible and temporal aspects of a building tangible, Arch-OS creates a rich and dynamic set of opportunities for research, educational and cultural activities, as well as providing a unique and innovative work environment.

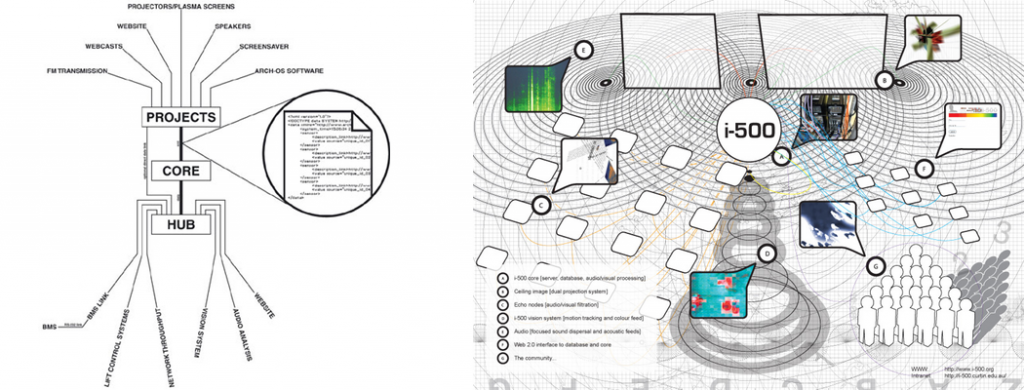

There have been two large-scale system installations and multiple installations of Arch-OS components. The two large-scale installations can be found in the Portland Square Building at Plymouth University, Plymouth, UK, and the Curtin University Resources and Chemistry Research and Education Building, Perth, WA.

Portland Square and Curtin University Resources and Chemistry Research and Education Building.

There were two distinct system diagrams produced for these installations:

Original Arch-OS and i-500.

Arch-OS integrates the three technological levels of a Cybrid building. These are:

- [1] Interface: The Arch-OS infrastructure, networks, sensors and audio-visual playback systems… nervous system…

- [2] Core: The control centre for the Arch-OS, the central processor, the modeller and visualiser… brain…

- [3] Projects: The means of expression for the Arch-OS, commissions for artists, engineers and scientists… emotion…

[1] The ‘Interface’

The Interface is the construction of the internal media networks and data collection devices. This interface (between the physical and the virtual) consists of a dedicated network that transports data from a range of sensors (cameras that monitor the ‘flocking’ of people, microphones to monitor ambient sounds, BEMS information, network traffic data, lift location and movement) to the ‘Core’.

A: Building Energy Management System [BEMS]:

The Arch-OS BEMS tool measures the environmental changes within a building. It is essentially a large database of energy usage and environmental control. Arch-OS interrogates approximately 2000 sensors (in the Portland Square installation) of a standard industrial BEMS. Using the standard Modbus serial communications protocol, the Arch-OS BEMS tool is a purpose-built Modbus master connected via an RS-232 link to the BEMS, which acts as the slave, ensuring that the integrity of the BEMS security is maintained. Arch-OS can tap into a range of BEMS APIs, or tailored ‘hacks’ can be built to suit older systems.
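As a rough illustration of what a Modbus master sends over a serial link, the sketch below builds a single "read holding registers" request. It assumes Modbus RTU framing and function code 0x03; the source only states that Modbus serial over RS-232 is used, so the framing variant, the slave ID and the register addresses are all hypothetical. The function names are illustrative and are not part of Arch-OS.

```python
import struct


def crc16_modbus(data: bytes) -> int:
    """CRC-16/MODBUS: initial value 0xFFFF, reflected polynomial 0xA001."""
    crc = 0xFFFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            if crc & 1:
                crc = (crc >> 1) ^ 0xA001
            else:
                crc >>= 1
    return crc


def read_holding_registers_request(slave_id: int, start: int, count: int) -> bytes:
    """Build an RTU request frame asking a slave for `count` registers."""
    # Address, function code 0x03, start register and quantity are big-endian.
    body = struct.pack(">BBHH", slave_id, 0x03, start, count)
    # The CRC is appended low byte first, unlike the fields above.
    return body + struct.pack("<H", crc16_modbus(body))


def frame_is_valid(frame: bytes) -> bool:
    """A frame with a correct trailing CRC has a CRC of zero over all its bytes."""
    return len(frame) >= 4 and crc16_modbus(frame) == 0


# Hypothetical poll: slave 1, ten registers starting at address 0.
frame = read_holding_registers_request(slave_id=1, start=0, count=10)
print(frame.hex(" "), frame_is_valid(frame))
```

Because the master only issues read requests of this kind, a tool built this way can observe sensor values without writing to the BEMS.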

Arch-OS can select subsets of both digital and analogue sensors for real-time data monitoring. The data can be stored on the Arch-OS server for on-demand acquisition or exported directly to the user's application in ODBC format. In both cases this is achieved via TCP/IP connections to the user's application.

B: Vision:

The Arch-OS vision tool monitors the flow of people through the building, and provides a stream of visual data to the Core Arch-OS. In the Arch-OS vision system, the composite video signals of the cameras are pre-amplified, then sent to three PCs in the Arch-OS control room. Each PC acquires live images with a frame grabber card and processes them using dedicated motion detection and tracking software.
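The source does not say which motion detection algorithm the vision software uses. As a minimal sketch of the general idea, the example below uses simple frame differencing on greyscale frames held as nested lists; the threshold value and the function names are assumptions chosen for illustration.

```python
def motion_mask(previous, current, threshold=25):
    """Mark pixels whose grey level changed by more than `threshold`."""
    return [
        [1 if abs(c - p) > threshold else 0 for p, c in zip(prev_row, cur_row)]
        for prev_row, cur_row in zip(previous, current)
    ]


def motion_centroid(mask):
    """Return the (row, column) centre of the moving pixels, or None."""
    points = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not points:
        return None
    n = len(points)
    return (sum(r for r, _ in points) / n, sum(c for _, c in points) / n)


# Two tiny 3x4 frames: a bright patch appears in the middle row.
previous = [[10, 10, 10, 10],
            [10, 10, 10, 10],
            [10, 10, 10, 10]]
current = [[10, 10, 10, 10],
           [10, 200, 200, 10],
           [10, 10, 10, 10]]

mask = motion_mask(previous, current)
print(mask, motion_centroid(mask))
```

Following the centroid from frame to frame gives a crude track of where movement is concentrated, which is the kind of signal a 'flocking' visualisation could consume.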

Motion information is stored every 40 ms as a data matrix using a double buffer scheme. A web server runs on each PC enabling the user application to access the most up-to-date data in the form of a binary stream produced by a server-side CGI program.
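A double buffer lets the tracker write a new matrix while clients read the last complete one. The sketch below is one way to arrange this for a single writer; the class name and matrix layout are hypothetical, and the real system's implementation is not described in the source. A 40 ms update interval corresponds to 25 updates per second.

```python
import threading


class DoubleBuffer:
    """Two matrices: one being filled (back), one being served (front)."""

    def __init__(self, rows, cols):
        self._buffers = [[[0] * cols for _ in range(rows)] for _ in range(2)]
        self._front = 0
        self._lock = threading.Lock()

    def write(self, matrix):
        """Fill the back buffer, then swap it to the front (single writer only)."""
        back = 1 - self._front
        for r, row in enumerate(matrix):
            self._buffers[back][r][:] = row
        # The swap waits for any reader that is still copying the old front.
        with self._lock:
            self._front = back

    def read(self):
        """Return a copy of the most recent complete matrix."""
        with self._lock:
            return [row[:] for row in self._buffers[self._front]]


motion = DoubleBuffer(rows=2, cols=3)
motion.write([[0, 1, 0], [1, 1, 0]])
print(motion.read())
```

Readers never see a half-written matrix because the front index only changes after the back buffer has been filled completely.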

Alternative solutions can be integrated, such as Kinect SDK, Flash, OpenCV and Processing-based vision systems.

C: Audio:

The Arch-OS audio tool consists of an integrated recording, processing and playback system, which allows an evolving library of sounds, generative audio and live recordings to be played through a multi-speaker system (56 speakers in the Portland Square development). The multi-speaker system provides a unique three-dimensional matrix within the building, which allows audio to be positioned at specific locations and panned around the space, through corridors and around atria.
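The source does not describe the panning law used. One common approach, sketched here as an assumption rather than as the Arch-OS method, is distance-based amplitude panning: each speaker's gain falls off with its distance from the virtual sound position, and the gains are normalised so that total power stays constant. The speaker coordinates and the `rolloff` parameter are hypothetical.

```python
import math


def speaker_gains(source, speakers, rolloff=1.0):
    """Constant-power gains for one sound source across several speakers."""
    weights = []
    for position in speakers:
        distance = math.dist(source, position)
        # Nearer speakers get larger weights; +1.0 avoids division by zero.
        weights.append(1.0 / (1.0 + rolloff * distance))
    # Normalise so the squared gains sum to 1 (constant total power).
    norm = math.sqrt(sum(w * w for w in weights))
    return [w / norm for w in weights]


# Three hypothetical speakers, positions in metres (x, y, z).
speakers = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (0.0, 10.0, 3.0)]

# Move a sound along a corridor from the first speaker towards the second.
for x in (1.0, 5.0, 9.0):
    gains = speaker_gains((x, 0.0, 0.0), speakers)
    print(x, [round(g, 3) for g in gains])
```

Updating the source position over time, for example from the vision system's tracking data, moves the sound smoothly between speakers.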

The Curtin University installation uses Audio Spotlight technology to focus beams of sound into specific areas within the building to ensure minimum sonic disruption.

Arch-OS audio can be controlled by the Core processing system (sounds tracking the flow of people captured on the Arch-OS vision system for instance), by the inhabitants of the building or through the internet to allow users to remotely orchestrate sounds within the space.

D: Network:

The Arch-OS network tool monitors and harnesses the flow of data within the building's computer networks, providing a rich source for audio-visual simulations. It monitors TCP/IP network traffic without interfering with the speed of the network or transgressing data protection legislation. Quantity and speed of traffic can be monitored, as well as direct interactions with the other components of the Arch-OS system through the Arch-OS server.
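One way to derive the quantity and speed of traffic without inspecting packet contents is to sample cumulative byte counters and take differences. The sketch below assumes such samples are already available as `(seconds, cumulative_bytes)` pairs; where the counters come from is outside the sketch, and neither the sample format nor the function name is taken from Arch-OS. Counter wrap-around is not handled.

```python
def throughput(samples):
    """Convert (seconds, cumulative_bytes) samples into bytes-per-second rates."""
    rates = []
    for (t0, b0), (t1, b1) in zip(samples, samples[1:]):
        if t1 <= t0:
            continue  # skip duplicate or out-of-order samples
        rates.append((t1, (b1 - b0) / (t1 - t0)))
    return rates


# Hypothetical counter readings taken at 0 s, 1 s and 3 s.
samples = [(0, 0), (1, 1000), (3, 5000)]
for timestamp, rate in throughput(samples):
    print(f"t={timestamp}s  {rate:.0f} bytes/s")
```

A stream of rates like this can drive sound or visual parameters directly, since it carries only the volume of traffic and none of its content.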

The system also provides a range of streaming media output and direct access to the Arch-OS server to support interactions with the other Arch-OS elements.

[2] Core:

The control centre for Arch-OS, the central processor, the modeller and visualiser… brain…

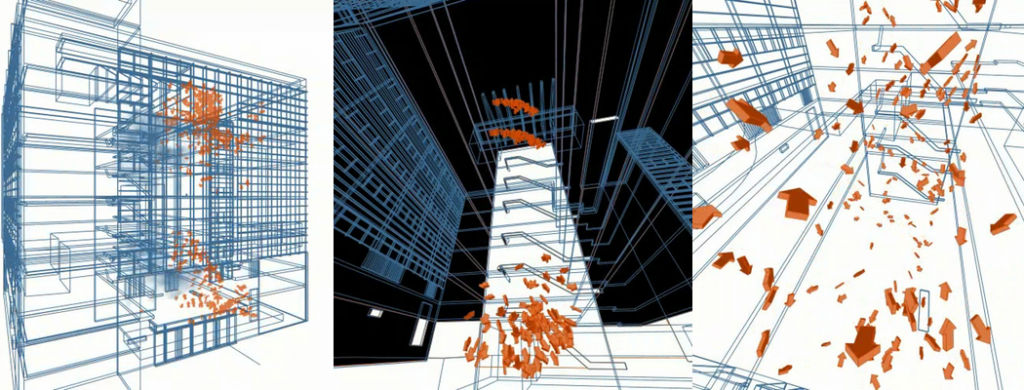

The Core processes and manipulates the dynamic data generated by the ‘Interface’. The Core computer system uses a data engine which incorporates a range of interactive multimedia applications (video and audio processors, neural networks, generative media, dynamic visualisation and simulation software) which generate a dynamic 3D sonic model of the building and its activities.

The Core data model allows artists, scientists and engineers to manipulate and control the building's media output, which can be broadcast within and between each structure, and out over the internet.

[3] Projects:

Arch-OS Projects are curated and produced by i-DAT.